I often find myself humming some made-up tune to the gentle whir of a room’s machinery in the background of my consciousness. What would happen if that whir became more pronounced, and the room started singing its own tune?

To accomplish this, I must do a few things:

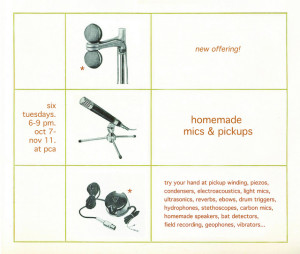

1. Pick up the noise in a room with a microphone (exact type to be determined)

2. Analyze the sound to determine the room’s base frequency. Continue analyzing that sound to determine if/when that frequency changes.

3. Create a never-ending tune based on the base frequency.

4. Send that tune into the room as unobtrusively as possible, to make it seem like the room itself is singing.

1. Pick up the noise in a room with a microphone (exact type to be determined)

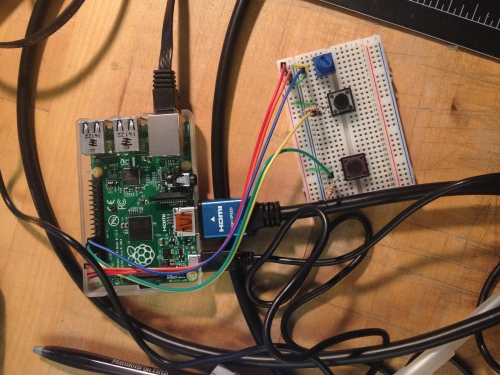

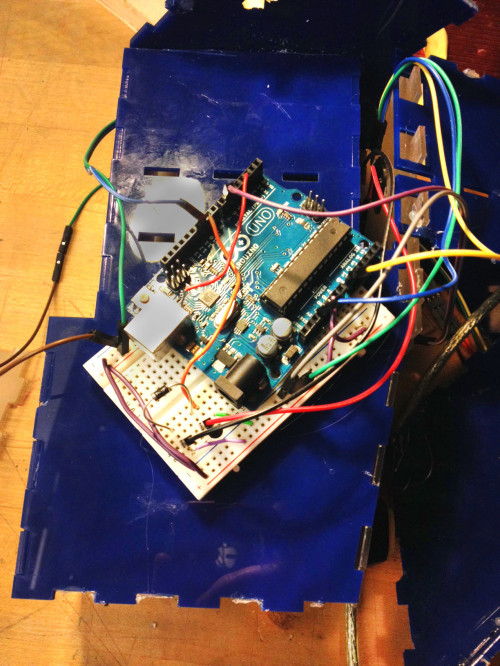

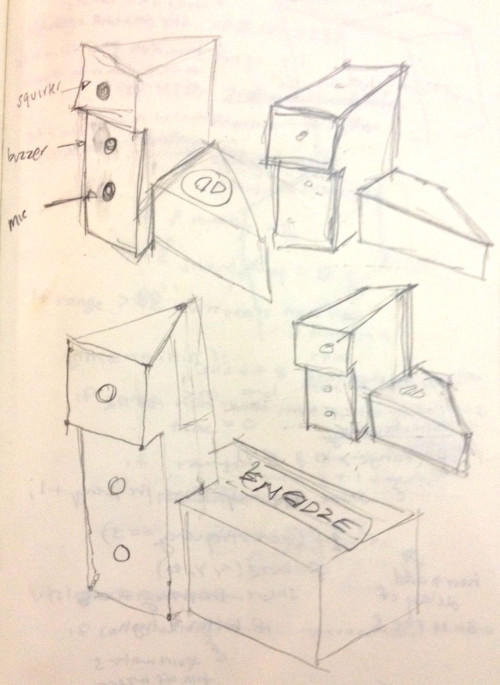

An [electret mic](https://en.wikipedia.org/wiki/Electret_microphone) is my microphone of choice in this case. The one I’m using from [Adafruit](https://www.adafruit.com/products/1063) is pretty good, and very easy to use. Sound has always seemed like a mystical, mysterious thing to me, but over the past year or so it has all been coming together, and it’s a lot simpler than I expected.

2. Analyze the sound to determine the room’s base frequency. Continue analyzing that sound to determine if/when that frequency changes.

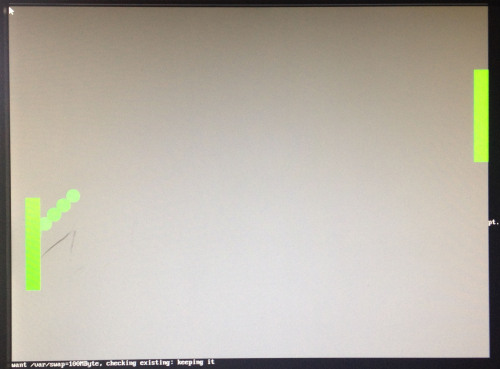

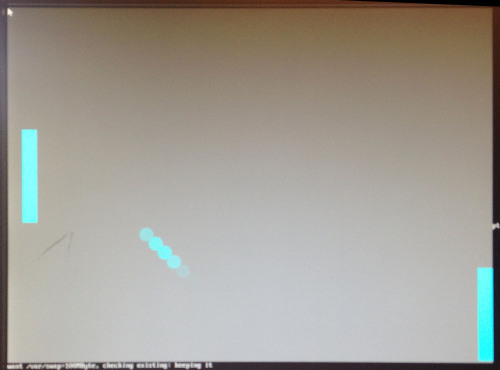

An FFT (fast Fourier transform) computes the amplitude of each frequency present in the sound picked up by the microphone. The one I’m using splits the spectrum into 64 bins, each 75 Hz wide, so it covers roughly 0 to 4.8 kHz (64 × 75 Hz) rather than the whole audible range.
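To make the binning concrete, here is a minimal Python sketch of this analysis step. The sample rate (9600 Hz) and frame size (128 samples) are assumptions, chosen only because they produce 64 bins of 75 Hz (9600 / 128 = 75). A plain DFT stands in for the FFT, since it computes the same bin values, just more slowly. Treating the loudest bin as the base frequency and the one-bin tolerance in the hypothetical `frequency_changed` helper are illustrative choices, not necessarily what the real system does.

```python
import cmath
import math

SAMPLE_RATE = 9600                    # Hz (assumed)
FRAME_SIZE = 128                      # samples per analysis frame (assumed)
NUM_BINS = FRAME_SIZE // 2            # 64 usable bins
BIN_WIDTH = SAMPLE_RATE / FRAME_SIZE  # 75.0 Hz per bin


def bin_magnitudes(samples):
    """Magnitude of each of the 64 bins, via a naive DFT (an FFT gives the same values)."""
    n = len(samples)
    mags = []
    for k in range(NUM_BINS):
        acc = sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        mags.append(abs(acc))
    return mags


def base_frequency(samples):
    """Estimate the base frequency as the frequency of the loudest bin.

    Bin 0 holds the DC offset (e.g. a mic amplifier's bias voltage), not a tone,
    so it is skipped.
    """
    mags = bin_magnitudes(samples)
    loudest = max(range(1, NUM_BINS), key=lambda k: mags[k])
    return loudest * BIN_WIDTH


def frequency_changed(previous_hz, current_hz, tolerance_bins=1):
    """Hypothetical helper: report a change only if the peak moved by more than one bin."""
    return abs(current_hz - previous_hz) > tolerance_bins * BIN_WIDTH


# Usage: a synthetic 300 Hz hum, which falls exactly on bin 4 (300 / 75).
hum = [math.sin(2 * math.pi * 300 * t / SAMPLE_RATE) for t in range(FRAME_SIZE)]
print(base_frequency(hum))
```

Requiring the peak to move by more than one bin before calling it a change keeps small fluctuations between neighbouring bins from constantly retuning the song.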

3. Create a never-ending tune based on the base frequency.

Using [OSC](https://en.wikipedia.org/wiki/Open_Sound_Control), I can send the FFT-derived base frequency to a Raspberry Pi running [Pd-extended](http://puredata.info/downloads/pd-extended). In Pd, tone generation is as simple as connecting a few objects, and song generation is only a little more complicated than that.

Multiplying a frequency by certain whole-number ratios produces natural harmonies: for example, the base frequency times five-fourths gives a major third above the base, and times fifteen-eighths gives a major seventh.
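The ratio arithmetic is easy to check in code. This sketch uses just-intonation ratios; only the major third (5/4) and major seventh (15/8) come from the text above, and the other entries are common textbook values added for illustration.

```python
from fractions import Fraction

# Just-intonation ratios relative to the base frequency.
JUST_RATIOS = {
    "unison": Fraction(1, 1),
    "major second": Fraction(9, 8),
    "major third": Fraction(5, 4),
    "perfect fourth": Fraction(4, 3),
    "perfect fifth": Fraction(3, 2),
    "major sixth": Fraction(5, 3),
    "major seventh": Fraction(15, 8),
    "octave": Fraction(2, 1),
}


def interval_above(base_hz: float, interval: str) -> float:
    """Frequency of `interval` above `base_hz`, using just intonation."""
    return base_hz * float(JUST_RATIOS[interval])


for name in ("unison", "major third", "perfect fifth", "major seventh"):
    print(f"{name:>13}: {interval_above(300.0, name):.1f} Hz")
```

With a 300 Hz base, the major third lands at 375 Hz and the major seventh at 562.5 Hz.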

Using this knowledge in combination with a basic chord progression and a little randomness, I can create a never-ending song that perpetually realigns itself to the incoming frequency.
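One way to combine a chord progression, a little randomness and a base frequency that can move is a generator that re-reads the frequency before every note. This is a hypothetical sketch, not the author’s Pd patch: the I-IV-V-I progression, the chord tones, the four notes per chord and the `get_base_hz` callback are all assumptions.

```python
import itertools
import random
from fractions import Fraction
from typing import Callable, Iterator

# Chord roots for an assumed I-IV-V-I progression, as just ratios of the base.
PROGRESSION = [Fraction(1), Fraction(4, 3), Fraction(3, 2), Fraction(1)]
# Root, major third (5/4) and perfect fifth (3/2) of each chord.
CHORD_TONES = [Fraction(1), Fraction(5, 4), Fraction(3, 2)]
NOTES_PER_CHORD = 4


def endless_tune(get_base_hz: Callable[[], float], rng: random.Random) -> Iterator[float]:
    """Yield note frequencies forever.

    The base frequency is re-read before every note, so the melody follows
    the room whenever its hum shifts.
    """
    for chord_root in itertools.cycle(PROGRESSION):
        for _ in range(NOTES_PER_CHORD):
            tone = rng.choice(CHORD_TONES)  # a little randomness
            yield get_base_hz() * float(chord_root * tone)


# Usage: the dict stands in for a value updated by incoming OSC messages.
base = {"hz": 300.0}
tune = endless_tune(lambda: base["hz"], random.Random(42))
first_bar = [next(tune) for _ in range(NOTES_PER_CHORD)]   # over the I chord
base["hz"] = 375.0                                         # the room's hum shifts
second_bar = [next(tune) for _ in range(NOTES_PER_CHORD)]  # IV chord, new base
```

Because the generator asks for the base frequency on every note rather than once at start-up, a change detected by the analysis step takes effect on the very next note.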

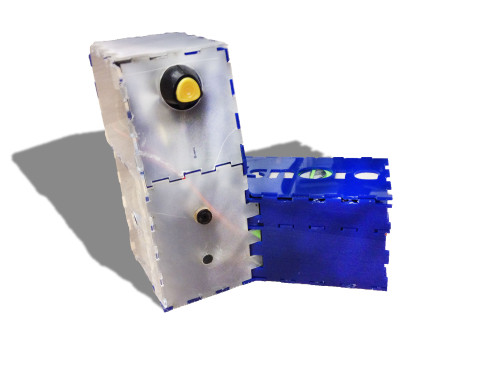

4. Send that tune into the room as unobtrusively as possible, to make it seem like the room itself is singing.

There isn’t much to show here, and that’s kind of the whole point. I’ve embedded my system in the air vents in the floor below. A [surface transducer](https://www.adafruit.com/products/1784) (essentially a speaker without a cone) transfers the amplified music into the highly reverberant metal air ducts.