LAYERd

LAYERd is a multi-layer display made from off-the-shelf computer monitors. It allows for glasses-free 3D as well as a novel way to envision user interfaces.

Every LCD screen on the planet is made of two main parts: a transparent assembly (made of laminated glass, liquid crystal, and polarizing filters) and a backlight. Without the backlight, an LCD monitor is actually completely transparent wherever a white pixel is drawn.

LAYERd uses three of these LCD assemblies illuminated by a single backlight to create a screen with real depth: objects drawn on the front display are physically in front of objects drawn on the rear ones.
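
One way to think about drawing for such a stack is to sort each on-screen element into the physical layer nearest its intended depth. The sketch below is illustrative only and is not taken from the LAYERd software; the names `layer_index` and `split_into_layers`, and the normalized depth scale (0 for nearest, 1 for farthest), are assumptions made for the example.

```python
def layer_index(depth, num_layers=3):
    """Map a normalized depth in [0, 1] to a layer index.

    depth 0.0 is nearest the viewer; index 0 is the front panel.
    (Hypothetical helper, not part of the original project.)
    """
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be in [0, 1]")
    # Split the depth range into equal bands, one per panel.
    # depth == 1.0 is clamped into the rearmost band.
    return min(int(depth * num_layers), num_layers - 1)


def split_into_layers(objects, num_layers=3):
    """Group (name, depth) pairs into per-panel lists, front panel first."""
    layers = [[] for _ in range(num_layers)]
    for name, depth in objects:
        layers[layer_index(depth, num_layers)].append(name)
    return layers


if __name__ == "__main__":
    scene = [("cursor", 0.0), ("window", 0.5), ("wallpaper", 1.0)]
    for i, names in enumerate(split_into_layers(scene)):
        print(f"layer {i}: {names}")
```

Since a panel is transparent wherever it draws white, each layer would presumably be cleared to white before its own elements are drawn, so that the layers behind it remain visible.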

My work is mainly focused on the potential UI uses for such a display: what can one do with discrete physical layers in space?

PROCESS

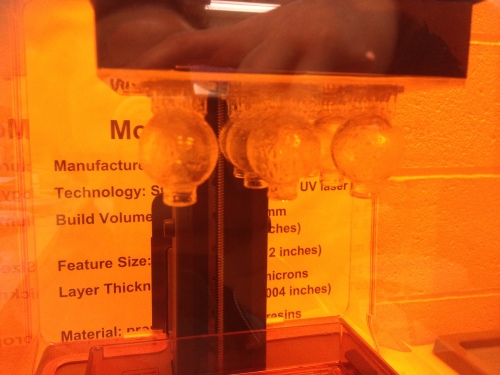

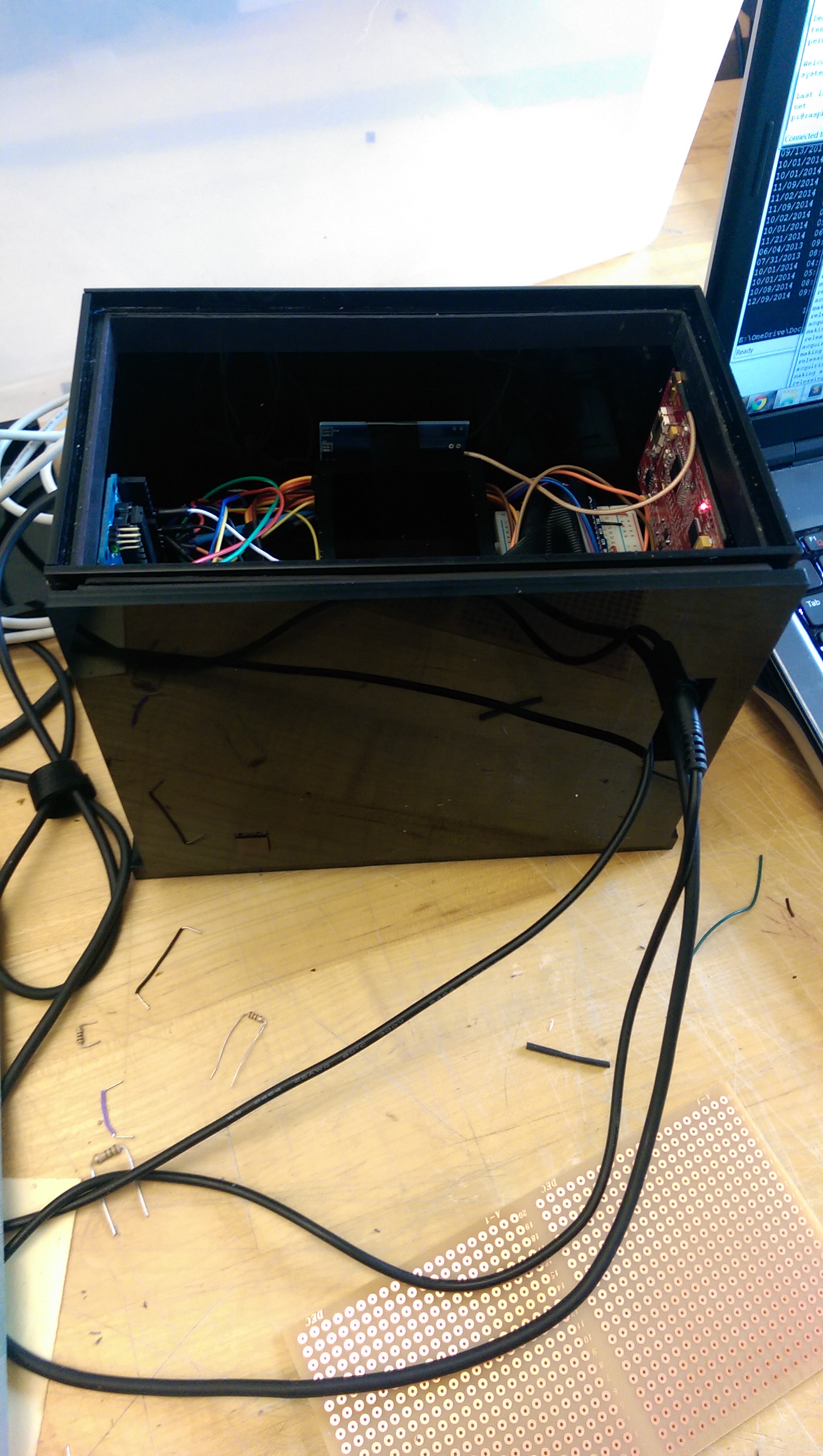

The process begins by disassembling the three monitors. After destroying two cheaper ones with static electricity before the project began in earnest, I was very careful to keep the delicate electronics grounded at all times, and I worked on top of an anti-static mat and used an anti-static wristband when possible.

With some careful prying, the whole thing came undone.

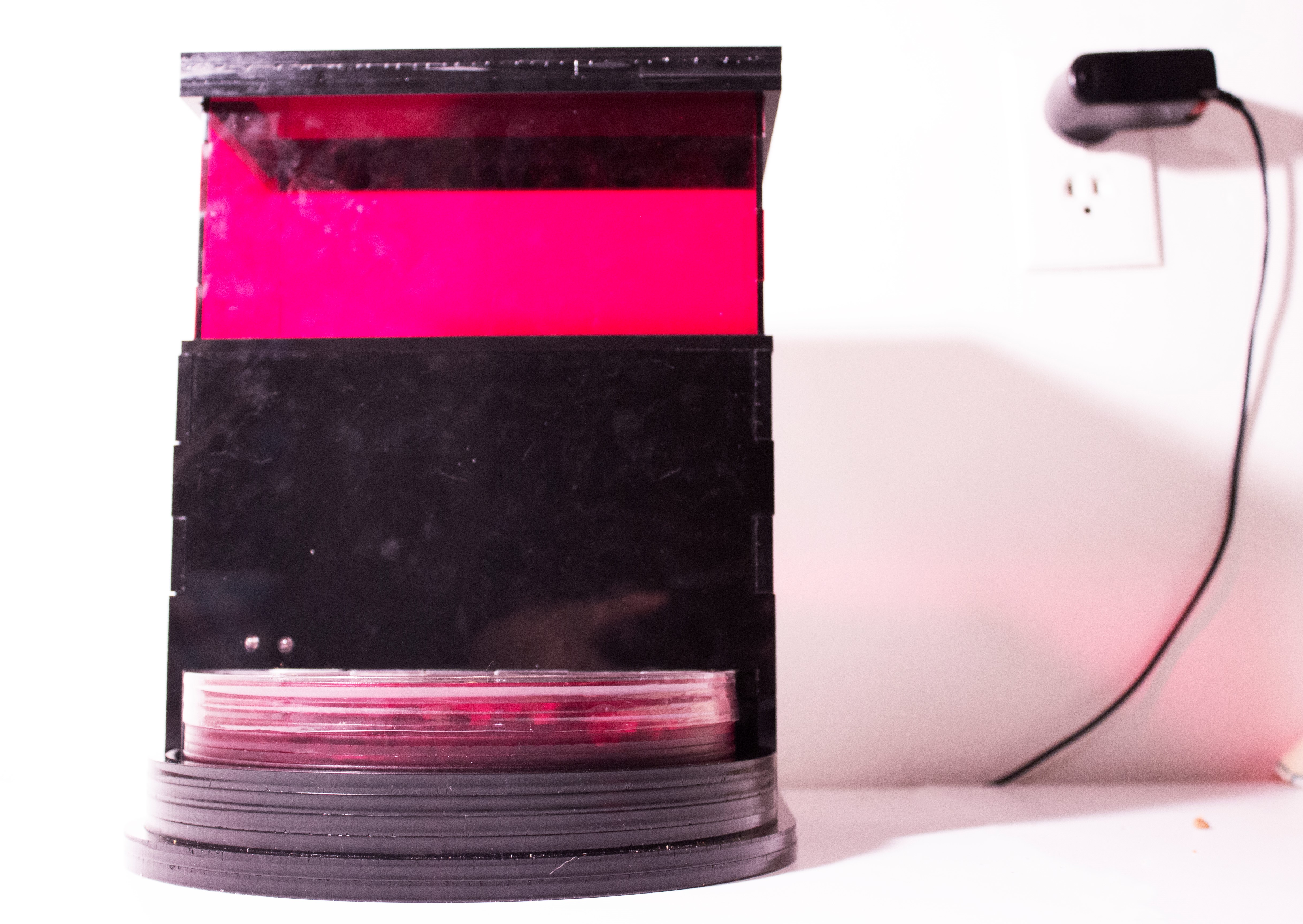

Here, you can see how the glass panel is transparent, and how the backlight illuminates it.

—

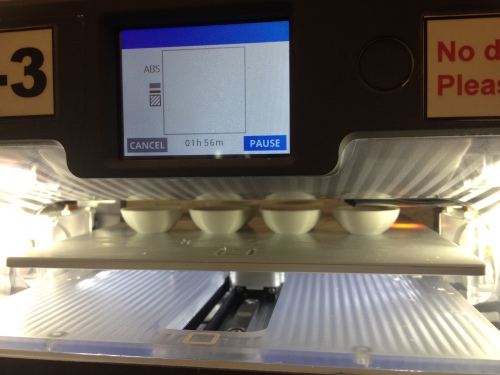

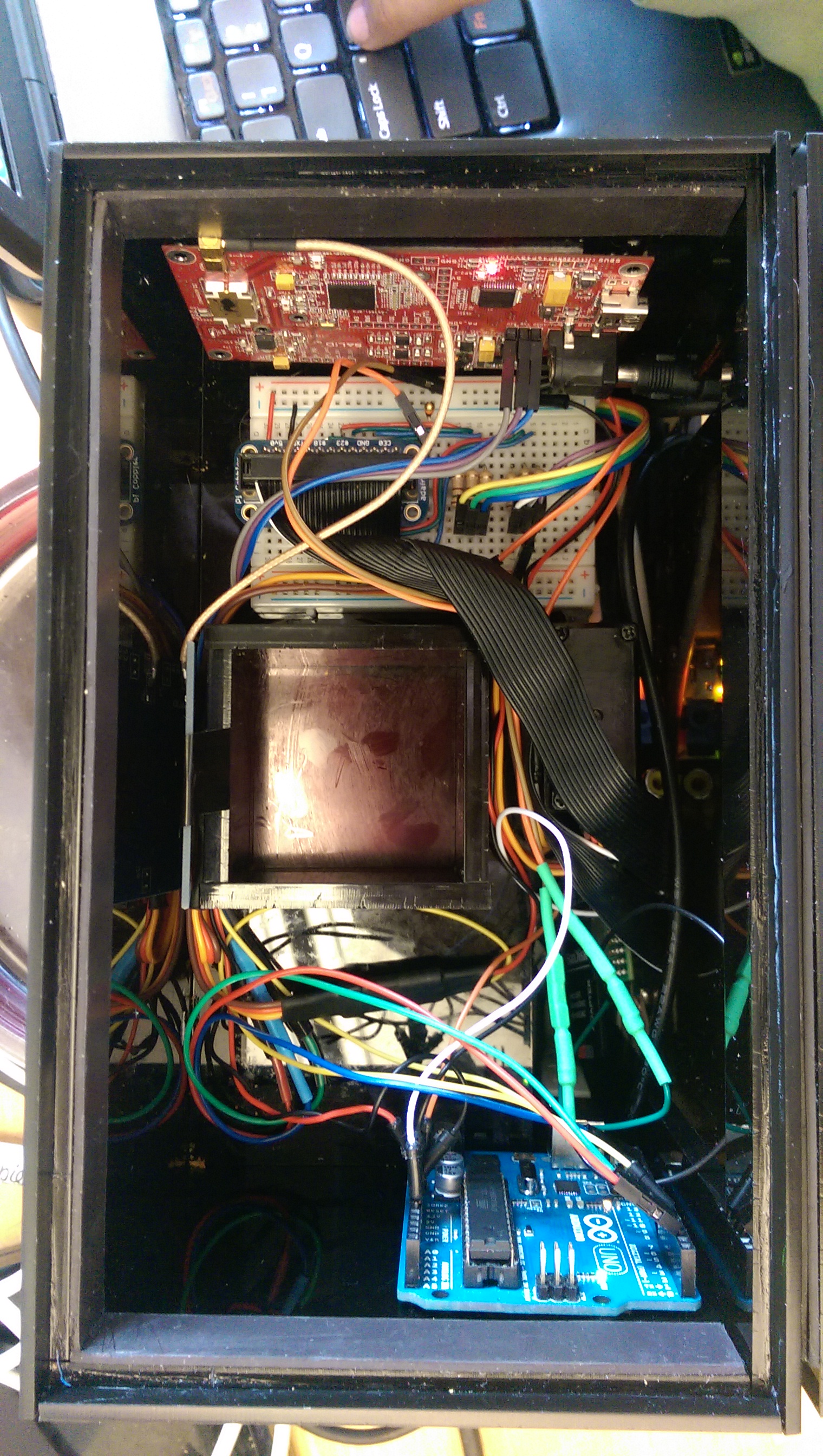

After disassembly came the design, laser cutting, and assembly of the frames and display.

—

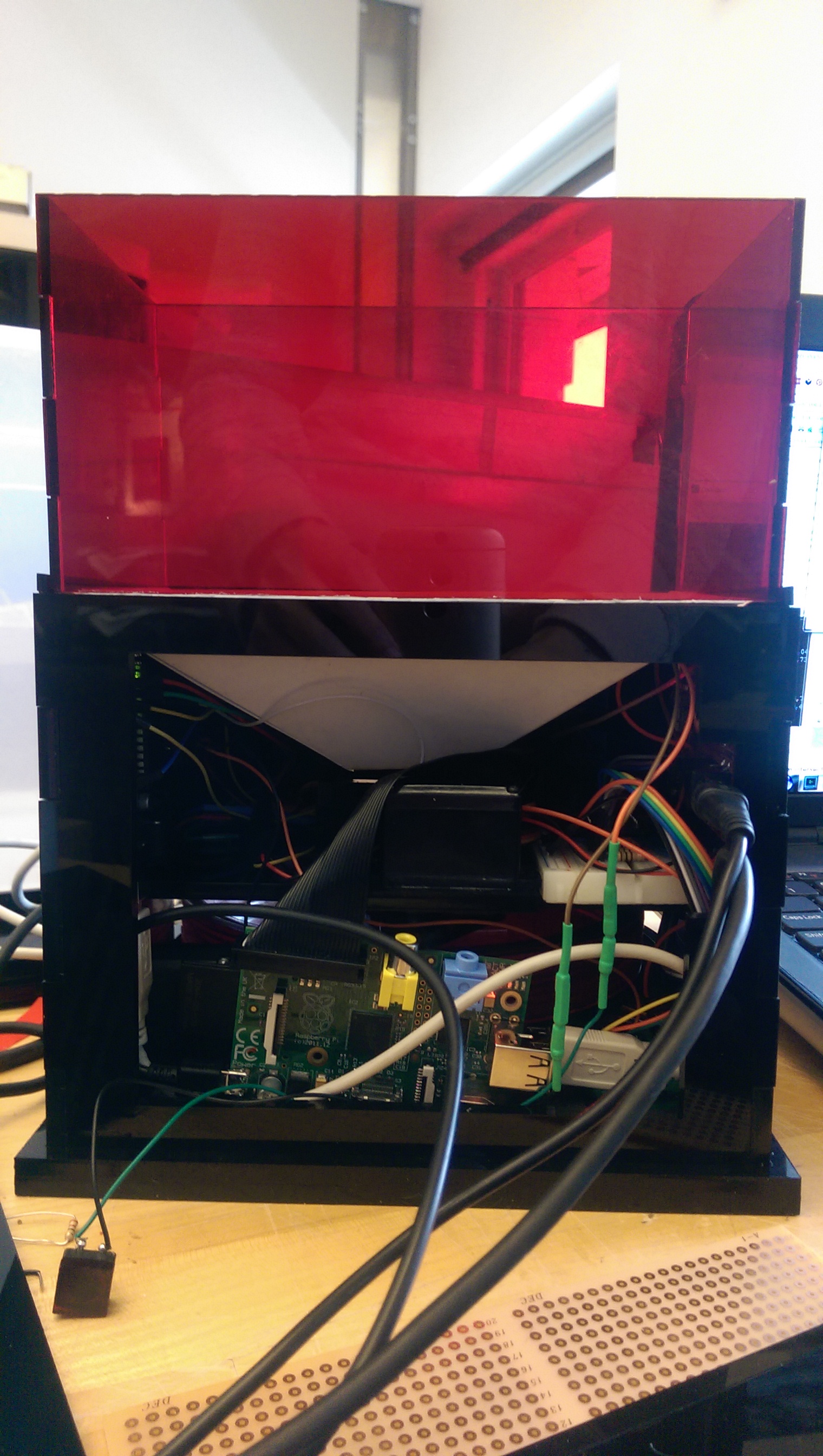

Finally, the finished product.

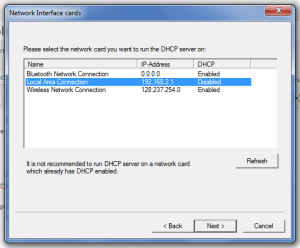

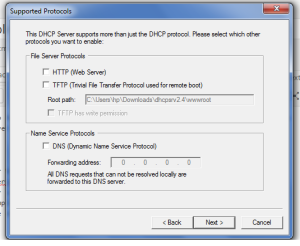

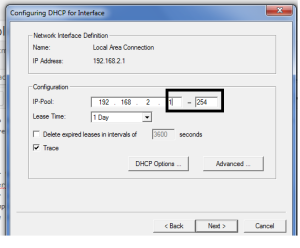

It uses three networked Raspberry Pis to keep everything in sync, as well as the power supplies/drivers from the disassembled monitors.
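
The write-up does not say how the three Pis are kept in sync. One simple approach, sketched here purely as an assumption, is to keep the Pis' clocks aligned (for example with NTP) and have each one derive the current frame number from the shared wall-clock time instead of exchanging per-frame messages. The function name `frame_index` and its parameters are hypothetical.

```python
import time


def frame_index(now, start_time, fps=30.0, frame_count=None):
    """Return the frame that should be on screen at time `now`.

    Every Pi calls this with the same agreed `start_time`, so panels
    whose clocks agree will show the same frame number.
    (Assumed scheme; the original sync method is not documented.)
    """
    elapsed = max(0.0, now - start_time)  # before the start, hold frame 0
    index = int(elapsed * fps)
    if frame_count:
        index %= frame_count  # loop the animation
    return index


if __name__ == "__main__":
    start = time.time()
    time.sleep(0.1)
    print("current frame:", frame_index(time.time(), start))
```

With this scheme the network is only needed to agree on `start_time` once, which keeps the display tolerant of dropped packets, at the cost of depending on how well the clocks are synchronized.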

LESSONS LEARNED

I learned a lot about polarization, mainly that about half of the polarizing films needed to be removed for light to pass through the assembly. Plus, this cool little trick with polarized light.
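
The need to remove films can be motivated with Malus's law, which gives the intensity $I$ transmitted through an ideal polarizer whose axis makes an angle $\theta$ with the polarization of incoming light of intensity $I_0$. This is textbook optics, not a measurement from this build, and it assumes ideal films:

$$
I = I_0 \cos^2\theta
$$

At $\theta = 90^\circ$ the transmitted intensity is zero. So if the facing films of two stacked panels happen to be crossed, which is an assumption about these particular monitors, they would block the backlight before it reaches the next panel.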

—

I also learned about safety and how monitors work. Alas! Disaster struck: I accidentally cut one of the ribbon cables while disassembling a monitor, which resulted in a single vertical stripe of dead pixels. Plus, my front display got smashed a little on the way to the gallery show, which left a black splotch.

RELATED WORK

One body of research strongly influenced my design and concept: the work being done at the MIT Media Lab’s Camera Culture Group, notably their research in Compressive Light Field Photography, Polarization Fields, and Tensor Displays.

Their work uses an assembly of displays similar to mine, but is focused mainly on producing glasses-free 3D imagery using light fields and directional backlighting.

A few other groups have also done work in this field, notably Apple Inc., which has a few related patents: one for a Multilayer Display Device and one for a Multi-Dimensional Desktop environment.

On the industry side of things is PureDepth®, a company that produces MLDs® (Multi Layer Displays™). There isn’t much information in the popular media about them or their products, but it seems they hold a large number of patents and trademarks in this area (over 90), and mainly produce their two-panel displays for slot and pachinko machines.

Another project, from CMU, is the Multi-Layered Display with Water Drops, which uses precisely synced water droplets and a projector to illuminate the “screens”.

REFERENCES

Chaudhri, I A, J O Louch, C Hynes, T W Bumgarner, and E S Peyton. 2014. “Multi-Dimensional Desktop.” Google Patents. www.google.com/patents/US8745535.

Lanman, Douglas, Gordon Wetzstein, Matthew Hirsch, Wolfgang Heidrich, and Ramesh Raskar. 2011. “Polarization Fields.” Proceedings of the 2011 SIGGRAPH Asia Conference on – SA ’11 30 (6). New York, New York, USA: ACM Press: 1. doi:10.1145/2024156.2024220.

Prema, Vijay, Gary Roberts, and BC Wuensche. 2006. “3D Visualisation Techniques for Multi-Layer Display™ Technology.” IVCNZ, 1–6. www.cs.auckland.ac.nz/~burkhard/Publications/IVCNZ06_PremaRobertsWuensche.pdf.

Wetzstein, Gordon, Douglas Lanman, Wolfgang Heidrich, and Ramesh Raskar. 2011. “Layered 3D.” ACM SIGGRAPH 2011 Papers on – SIGGRAPH ’11 1 (212). New York, New York, USA: ACM Press: 1. doi:10.1145/1964921.1964990.

Wetzstein, Gordon, Douglas Lanman, Matthew Hirsch, and Ramesh Raskar. 2012. “Tensor Displays: Compressive Light Field Synthesis Using Multilayer Displays with Directional Backlighting.” ACM Transactions on …. alumni.media.mit.edu/~dlanman/research/compressivedisplays/papers/Tensor_Displays.pdf.

Barnum, Peter C, and Srinivasa G Narasimhan. 2007. “A Multi-Layered Display with Water Drops.” www.cs.cmu.edu/~ILIM/projects/IL/waterDisplay2/papers/barnum10multi.pdf.

Lanman, Douglas, Matthew Hirsch, Yunhee Kim, and Ramesh Raskar. 2010. “Content-Adaptive Parallax Barriers.” ACM SIGGRAPH Asia 2010 Papers on – SIGGRAPH ASIA ’10 29 (6). New York, New York, USA: ACM Press: 1. doi:10.1145/1866158.1866164.

Mahowald, P H. 2011. “Multilayer Display Device.” Google Patents. www.google.com/patents/US20110175902.

Marwah, Kshitij, Gordon Wetzstein, Yosuke Bando, and Ramesh Raskar. 2013. “Compressive Light Field Photography Using Overcomplete Dictionaries and Optimized Projections.” ACM Transactions on Graphics 32 (4): 1. doi:10.1145/2461912.2461914.

—

This project was supported in part by funding from the Carnegie Mellon University Frank-Ratchye Fund For Art @ the Frontier.