Mano Extended – exploration

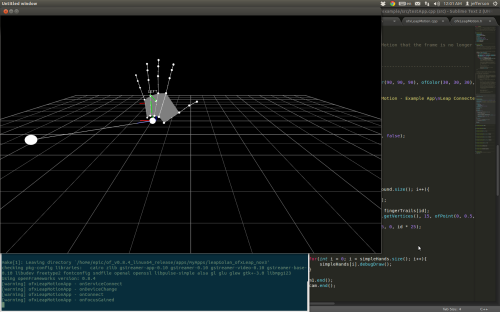

Parsing the stream of data from the Leap Motion proved no easy task for me, even though I used Golan’s Leap Visualizer, which is based on Theo’s ofxLeapMotion addon for openFrameworks. Once I got the data parsed and converted into OSC messages, it was time to route those messages in Pure Data and map them to the appropriate synthesis parameters. Here I present a few exploratory interactions.
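To give a rough idea of what "converted into OSC messages" and "mapped to synthesis parameters" mean in practice, here is a minimal Python sketch. It is not the openFrameworks code I actually used. The address `/hand/left/palm/y`, the port number, and the input and output ranges are all made up for illustration. The sketch encodes a message by hand, following the OSC 1.0 layout (padded address string, padded type-tag string, big-endian 32-bit floats), and rescales a palm height into a frequency.

```python
import socket
import struct


def osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    raw = text.encode("ascii") + b"\x00"
    return raw + b"\x00" * ((-len(raw)) % 4)


def osc_message(address: str, *values: float) -> bytes:
    """Build an OSC message whose arguments are all 32-bit big-endian floats."""
    type_tags = "," + "f" * len(values)
    payload = b"".join(struct.pack(">f", v) for v in values)
    return osc_string(address) + osc_string(type_tags) + payload


def scale(value, in_lo, in_hi, out_lo, out_hi):
    """Linearly map value from [in_lo, in_hi] to [out_lo, out_hi], clamped at both ends."""
    t = (value - in_lo) / (in_hi - in_lo)
    t = max(0.0, min(1.0, t))
    return out_lo + t * (out_hi - out_lo)


def send_palm_height(sock, host, port, palm_y_mm):
    """Map a palm height to a frequency and send it as a single OSC message over UDP."""
    # Assumed ranges: 100-400 mm above the sensor mapped to 220-880 Hz.
    freq = scale(palm_y_mm, 100.0, 400.0, 220.0, 880.0)
    # Hypothetical address; use whatever your patch expects.
    sock.sendto(osc_message("/hand/left/palm/y", freq), (host, port))
    return freq
```

To try it, create a UDP socket with `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)` and call `send_palm_height(sock, "127.0.0.1", 9000, 250.0)`. On the receiving end, recent versions of vanilla Pure Data can pick this up with something like `[netreceive -u -b 9000]` into `[oscparse]` and then `[route]`. That is one possible setup, not necessarily the one in my patch.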

I’m completely aware that these sounds aren’t pleasant. Since this is a long-term project, I’m now in the phase of finding which gestures I like for controlling which style of synthesis, and this is a slow process. Still, these initial explorations provide good insight into the controller’s capabilities and its flaws.

Sometimes the Leap gets confused and decides that your right hand is actually your left. It also sees your hands from a single viewpoint, so any gesture in which your hands cross, and one hand blocks the sensor’s view of the other, will probably confuse it, big time. This blocking is called occlusion.

I fully intend to continue exploring this device in future work, and I’m considering using it for my Master’s thesis project, yet to be revealed ;).

This project was supported in part by funding from the Carnegie Mellon University Frank-Ratchye Fund for Art @ the Frontier.

I also include an annotated portfolio of the research I’ve done for Aisling Kelliher’s Interaction Design Seminar on hand-gesture-based systems for controlling sound synthesis.