Phase

Phase Preview from Dan Russo on Vimeo.

Concept:

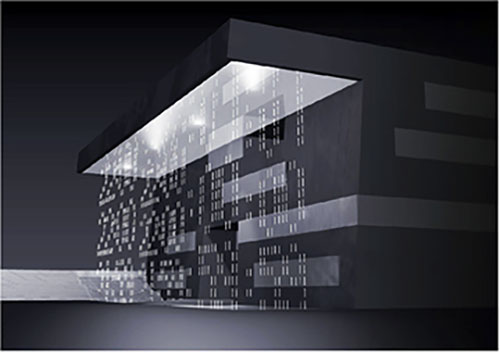

The concept for this project started as an exploration into the physicality of mathematics and geometry. These subjects are largely used, explored, and taught in on-screen software environments. Their direct relationship with computation makes the two an excellent and effective pair. However, the goal of this project was to take these abstract, complex processes and bring them into the real world with the same level of interactivity they have on screen. By combining digital technology with transparent mechanics, exploration can happen in a very engaging and interactive way.

Phase:

Phase works by mechanically carrying two perpendicular sine waves on top of each other. When the waves are in sync (matched in frequency), the undulation translated to the weights below is eliminated. When the two waves fall out of sync, the undulation becomes more vigorous. Interaction with the piece is mediated by a simple interface whose sliders independently control the frequency of each wave. When the sliders are matched, the weights become still; when they differ, the undulation becomes apparent.
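The cancellation described above can be illustrated numerically. The sketch below is only a toy model, not a description of the sculpture's actual linkage: it assumes each weight's displacement is the difference of two unit-amplitude sine waves that start in phase, and the names `displacement` and `peakUndulation` are made up for this example.

```cpp
#include <cmath>

// Hypothetical model (an assumption, not the real mechanics): a weight's
// displacement is the difference of two unit-amplitude, in-phase sine waves
// with frequencies f1 and f2 in hertz, evaluated at time t in seconds.
double displacement(double f1, double f2, double t) {
    const double kTwoPi = 2.0 * std::acos(-1.0);
    return std::sin(kTwoPi * f1 * t) - std::sin(kTwoPi * f2 * t);
}

// Largest absolute displacement observed over `seconds`,
// sampled at `samples` evenly spaced intervals (samples must be > 0).
double peakUndulation(double f1, double f2, double seconds, int samples) {
    double peak = 0.0;
    for (int i = 0; i <= samples; ++i) {
        const double t = seconds * i / samples;
        peak = std::fmax(peak, std::fabs(displacement(f1, f2, t)));
    }
    return peak;
}
```

Under this model, calling `peakUndulation(1.0, 1.0, 10.0, 10000)` gives zero because the two terms cancel at every instant, while slightly different frequencies such as `1.0` and `1.2` produce a slow beat whose peak approaches twice the amplitude of a single wave.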

Related Work:

Reuben Margolin

Reuben Margolin : Kinetic sculpture from Andrew Bates on Vimeo.

Reuben Margolin’s work uses mechanical movement and mathematics to reveal complex phenomena in a fresh, accessible way. The physicality of his installations was the inspiration for this project. These works set the precedent for beautiful and revealing sculptures, but the distinguishing goal of Phase was to bring an aspect of play and interaction to this type of work.

MathBox is a programming environment for creating math visualizations in WebGL. It is an excellent tool for visualizing complex systems. Phase seeks to take this visual approach to explanation and learning and apply it to a physical, tactile experience.

Lessons Learned: (controlling steppers)

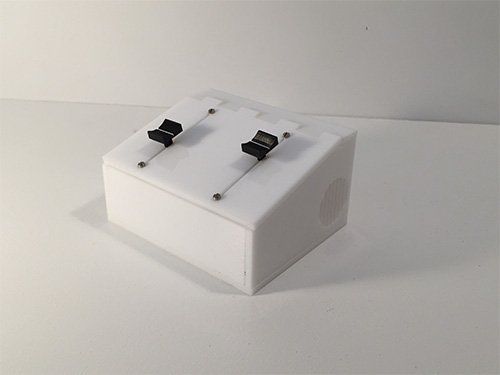

Stepper motors are a great way to power movement in a very controlled and accurate way. However, they can be really tricky to drive and work with. Below is a simple board I made that can be used to drive two stepper motors from one Arduino. The code is easy to pick up via the AccelStepper library, and this board clears up a lot of the challenging hardware issues so you can get up and running very quickly.
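As a starting point, here is a minimal sketch of how two motors might be run at slider-controlled speeds with AccelStepper. It is not the code used in Phase. The pin numbers, the analog inputs `A0` and `A1`, the 800 steps-per-second ceiling, and the helper `sliderToSpeed` are all assumptions for illustration, and it presumes drivers with STEP and DIR inputs, which is how Pololu's stepper driver carriers are normally wired. The `#ifdef ARDUINO` guards only exist so the speed-mapping helper can also be compiled and checked on a desktop.

```cpp
#ifdef ARDUINO
#include <AccelStepper.h>
#endif

// Assumed values for illustration; adjust to your motor and wiring.
const float kMaxStepsPerSec = 800.0f;
const int kAdcMax = 1023;  // 10-bit analogRead() range on classic Arduino boards

// Hypothetical helper: convert a raw slider reading (0..1023)
// into a constant speed in steps per second, clamping bad input.
float sliderToSpeed(int raw) {
    if (raw < 0) raw = 0;
    if (raw > kAdcMax) raw = kAdcMax;
    return kMaxStepsPerSec * raw / kAdcMax;
}

#ifdef ARDUINO
// DRIVER mode uses one STEP pin and one DIR pin per driver (pins assumed).
AccelStepper stepperA(AccelStepper::DRIVER, 2, 3);
AccelStepper stepperB(AccelStepper::DRIVER, 4, 5);

void setup() {
    stepperA.setMaxSpeed(kMaxStepsPerSec);
    stepperB.setMaxSpeed(kMaxStepsPerSec);
}

void loop() {
    // One slider per motor, read on analog inputs A0 and A1 (assumed).
    stepperA.setSpeed(sliderToSpeed(analogRead(A0)));
    stepperB.setSpeed(sliderToSpeed(analogRead(A1)));

    // runSpeed() takes at most one step per call, so call it as often as possible.
    stepperA.runSpeed();
    stepperB.runSpeed();
}
#endif
```

Constant-speed mode (`setSpeed` with `runSpeed`) is used here because the sliders set a frequency rather than a target position; AccelStepper's `moveTo` and `run` would be the alternative if accelerated moves to a position were needed.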

Things you need:

- Pololu Stepper Driver (check your specific motor’s data sheet to see whether you need the low- or high-voltage variant)

- 2 capacitors (100 µF)

- Solid Core Wire

- Adafruit Proto Board

- Terminal Blocks (2×4)

- Barrel Jack

See the photo below and the link for wiring.