Planet Wars

The idea of the project is to render the fictional world of a novel using playing cards so that the content becomes interactive.

Planet Wars is based on the famous science fiction novel “The Hitchhiker’s Guide to the Galaxy” by Douglas Adams. It consists of 28 cards depicting planets described in the novel. Every card has a keyword that describes the key characteristic of the planet or of the people who live on it.

How to Play-

Two or more players shuffle and distribute the cards among themselves. Using the set of planets in their hand, each player creates a story of how their planet would defeat the opponent’s planet. The opposing player then has to counter this argument. Whoever builds the better story wins.

How to see the fictional part and interact with the story-

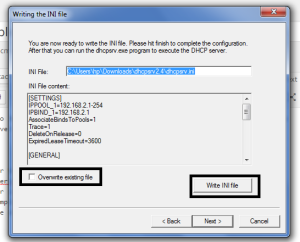

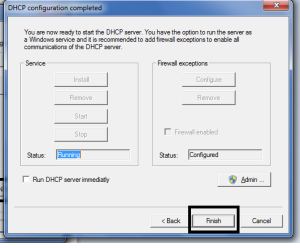

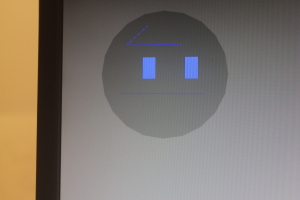

These cards are placed below a tablet running a custom-made application.

The cards carry the artist’s rendering of the fictional world. The winner of the round gets to tap on the 3D render of the opponent’s planet and blast it.

Once the planet is blasted, it is dead and the card cannot be used anymore. The augmented object is no longer shown.

Steps-

I tried multiple things before arriving at the final project described above. I think it’s important that I describe all the steps and learnings here-

1. I started with the idea of data sonification of an astronomy dataset. I looked at various datasets online and, within Pure Data, parsed a dataset of planet rise and set times as seen from Pittsburgh over one week. After this step, I realised that I needed to move to Max/MSP in order to create better sound and gain more control over the data.

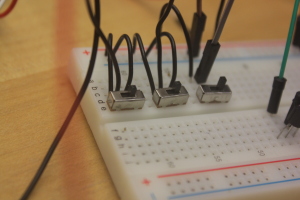

2. I started working with Max/MSP. I set up Max with Ableton and a Kinect so that the body gestures of a human skeleton could be tracked. This is the video demo of music generated when a person moves in front of the Kinect.

The music is currently changing in relation to hand movement.

3. After this step, I dived deep into finding the right dataset for sonification. I came across the following video and read more about the deep field image taken by the Hubble telescope over ten days.

Every dot in this image is a galaxy.

I was inspired by this image and decided to recreate the dataset inside Unity. The aim was to build the deep field image world in Unity and use the Kinect to let people move through the simulation.

Here is how it looks

4. I got feedback from the review that the simulation wasn’t interactive enough and that it did not offer enough of a user experience or immersion. We also happened to visit the gallery space where the final exhibit was going to take place. All of this made me realise that I should use all the skills I had learned up to that point to create something fun and playful. That’s when I thought about developing Planet Wars.
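To make the sonification idea from step 1 concrete, here is a minimal Python sketch of one common approach: mapping a time of day linearly onto a range of musical pitches. It is not the Pure Data patch used in the project. The function names, the note range, and the sample rise times are all hypothetical and chosen only for illustration.

```python
def parse_time(hhmm):
    """Convert an 'HH:MM' string into minutes after midnight."""
    hours, minutes = hhmm.split(":")
    return int(hours) * 60 + int(minutes)


def time_to_midi_note(minutes_after_midnight, low_note=48, high_note=84):
    """Linearly map a time of day (0..1439 minutes) onto a MIDI note range.

    The default range (C3 to C6) is an arbitrary assumption.
    """
    if not 0 <= minutes_after_midnight < 1440:
        raise ValueError("time must fall within a single day")
    fraction = minutes_after_midnight / 1439
    return low_note + round(fraction * (high_note - low_note))


def midi_to_frequency(note):
    """Convert a MIDI note number to a frequency in Hz (A4 = note 69 = 440 Hz)."""
    return 440.0 * 2 ** ((note - 69) / 12)


if __name__ == "__main__":
    # Made-up placeholder values, NOT the dataset used in the project.
    rise_times = {"Mercury": "06:10", "Venus": "04:45", "Mars": "22:30"}
    for planet, hhmm in rise_times.items():
        note = time_to_midi_note(parse_time(hhmm))
        print(planet, note, round(midi_to_frequency(note), 1))
```

With this mapping, a planet that rises later in the day is heard as a higher pitch, so a week of data becomes a short melody per planet.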

Learning

1. I learned to parse a dataset in Pure Data and work with sounds.

2. I was introduced to Max and Ableton and made my first project work on both of these platforms.

3. The technology used for the final project is the Unity 3D game engine.

Learnings- I learned to render 3D objects inside Unity. I learned to add shaders and textures to objects. I also wanted to be able to create particle systems, which were part of creating the explosion animation/special effect. I learned how to make my own ‘prefab’, a quality or set of features applied to an object that can be reused for other objects inside Unity. Also, I learned how to add gestural interactions, like tapping on virtual objects, to Android apps developed in Unity. I worked on attaching 3D sounds to explosions so that the sound is different depending on whether the user is near or far from the virtual object.
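The distance-dependent explosion sound can be illustrated with a small Python sketch. Unity handles this internally through its audio settings, so this is not the project's code and not Unity's exact rolloff curve. It is a generic inverse-distance model, and the function name and the `min_distance` and `max_distance` defaults are assumptions made for the example.

```python
import math


def attenuated_volume(listener_pos, source_pos, min_distance=1.0, max_distance=50.0):
    """Return a volume in [0, 1] that falls off with distance from the source.

    Generic inverse-distance model (an assumption, not Unity's implementation):
    full volume inside min_distance, silent at or beyond max_distance,
    and min_distance / distance in between.
    """
    d = math.dist(listener_pos, source_pos)  # Euclidean distance, Python 3.8+
    if d <= min_distance:
        return 1.0
    if d >= max_distance:
        return 0.0
    return min_distance / d


if __name__ == "__main__":
    explosion = (0.0, 0.0, 0.0)
    for listener in [(0.0, 0.0, 0.5), (0.0, 0.0, 2.0), (0.0, 0.0, 100.0)]:
        print(listener, attenuated_volume(listener, explosion))
```

A user standing close to the blasted planet hears the explosion at full volume, while a user twice the minimum distance away hears it at half volume under this model.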

References and related work

1. Astronomy Datasets- Astrostatistics, NASA dataset

2. SynapseKinect

3. Crater project

4. LHC sounds

5. Data sonification

Ahead

Based on the feedback I received, I am planning to add more special effects during planet interactions and more 3D objects beyond planets, such as the spaceship and different artifacts used in the novel.