The Teletickle

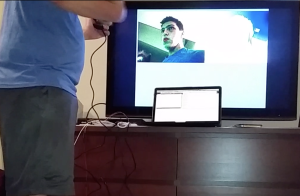

The Teletickle lets users send and receive tickles through a custom web app hosted at teletickle.com. Rather than exchanging texts, I wanted the Teletickle to let users send a more sensory and expressive message: a tickle. The system is meant to be experienced playfully; it is a device intended to make users laugh and enjoy both tickling and being tickled. The monkeys that enclose the electronics add to the playful, silly nature of the project, and research has shown that a silly design makes for a better tickling sensation. Further, the user who receives a tickle does not know when the motors that produce the tickling will be activated, or which ones; the tickling is entirely controlled by the user who sends it.

There is previous work in this space. In particular, this project draws on research on affective haptic feedback in the Human-Computer Interaction community (en.wikipedia.org/wiki/Affective_haptics), specifically the findings that a tickle is more effective when it comes at random moments and when the design of the system is silly or humorous.

I built the project with a divide-and-conquer approach: rather than trying to build the entire system at once, I built the pieces of the device separately and then connected them. There were two primary systems to build: the web application, and the Arduino software that interprets audio signals. Each part of these systems was built individually. The web app's front-end design was developed separately from its server side, and the communication between the two, which uses WebSockets, was also developed independently. The interface between the Arduino and the web app is the audio signal output through the phone's headphone jack; this link was built and tested with a separate Arduino program before being integrated. I think the key to developing the project was building each part of the system separately, isolating each part and getting it to work before integrating it into the whole.
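To make the audio link more concrete, here is a minimal C++ sketch of the sending side, built on loudly hypothetical assumptions: four motors, one fixed tone per motor (500, 1000, 1500 and 2000 Hz), and an 8 kHz sample rate. None of these values come from the project; they are placeholders chosen so that each tone lands exactly on a bin of a 256-sample FFT. The names `toneForMotor` and `synthesizeTickle` are likewise illustrative. Functions like these can generate test tones on a desktop, which is one way to exercise the audio link separately before the phone or the motors are involved.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// HYPOTHETICAL parameters, not taken from the Teletickle itself.
constexpr double kSampleRate = 8000.0;  // samples per second
constexpr double kMotorTones[] = {500.0, 1000.0, 1500.0, 2000.0};  // Hz, one tone per motor
constexpr std::size_t kNumMotors = sizeof(kMotorTones) / sizeof(kMotorTones[0]);

// Returns the tone frequency (Hz) assigned to a motor index.
double toneForMotor(std::size_t motor) {
    assert(motor < kNumMotors && "no such motor");
    return kMotorTones[motor];
}

// Synthesizes `durationMs` milliseconds of the sine tone assigned to `motor`,
// standing in for the audio the phone would play out of its headphone jack.
std::vector<double> synthesizeTickle(std::size_t motor, double durationMs,
                                     double amplitude = 1.0) {
    const double freq = toneForMotor(motor);
    const auto n = static_cast<std::size_t>(kSampleRate * durationMs / 1000.0);
    const double twoPi = 2.0 * std::acos(-1.0);
    std::vector<double> samples(n);
    for (std::size_t i = 0; i < n; ++i) {
        samples[i] = amplitude * std::sin(twoPi * freq * static_cast<double>(i) / kSampleRate);
    }
    return samples;
}
```

For example, `synthesizeTickle(1, 32.0)` yields 256 samples (32 ms at 8 kHz) of the 1000 Hz tone assigned to motor 1. In the Teletickle itself the web app on the phone produces the audio; this code only simulates that side for testing.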

Some lessons learned:

- Divide and conquer is a strategy that works well for me.

- Scoping the project is essential to making sure you can finish it on time.

- Haptic feedback needs to be pressed firmly against the body to be felt.

- Audio signals from the phone can be used to control other hardware by decoding them with a fast Fourier transform (FFT).
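As a minimal sketch of the last lesson, the following C++ decides which motor a block of audio samples is addressing: it runs an iterative radix-2 Cooley-Tukey FFT and then compares the magnitudes at the bins nearest each motor's tone. It assumes, hypothetically, four motors with tones at 500, 1000, 1500 and 2000 Hz, an 8 kHz sample rate, and a power-of-two block length; the names `fft` and `decodeMotor` and the threshold are illustrative, not the project's actual Arduino code. It is written as portable C++ for a desktop test. AVR-based Arduino boards do not ship the standard containers used here, so on such a board a fixed-size array or an existing FFT library would typically replace them, with samples read from the analog pin wired to the headphone jack.

```cpp
#include <cassert>
#include <cmath>
#include <complex>
#include <cstddef>
#include <utility>
#include <vector>

// HYPOTHETICAL parameters, not taken from the Teletickle itself.
constexpr double kSampleRate = 8000.0;  // ADC sampling rate, Hz
constexpr double kMotorTones[] = {500.0, 1000.0, 1500.0, 2000.0};  // Hz, one tone per motor
constexpr std::size_t kNumMotors = sizeof(kMotorTones) / sizeof(kMotorTones[0]);

// In-place iterative radix-2 Cooley-Tukey FFT; a.size() must be a power of two.
void fft(std::vector<std::complex<double>>& a) {
    const std::size_t n = a.size();
    assert(n > 0 && (n & (n - 1)) == 0);
    // Reorder the input into bit-reversed index order.
    for (std::size_t i = 1, j = 0; i < n; ++i) {
        std::size_t bit = n >> 1;
        for (; j & bit; bit >>= 1) j ^= bit;
        j ^= bit;
        if (i < j) std::swap(a[i], a[j]);
    }
    // Combine butterflies of size 2, 4, 8, ... up to n.
    const double pi = std::acos(-1.0);
    for (std::size_t len = 2; len <= n; len <<= 1) {
        const double angle = -2.0 * pi / static_cast<double>(len);
        const std::complex<double> wlen(std::cos(angle), std::sin(angle));
        for (std::size_t i = 0; i < n; i += len) {
            std::complex<double> w(1.0, 0.0);
            for (std::size_t k = 0; k < len / 2; ++k) {
                const std::complex<double> u = a[i + k];
                const std::complex<double> v = a[i + k + len / 2] * w;
                a[i + k] = u + v;
                a[i + k + len / 2] = u - v;
                w *= wlen;
            }
        }
    }
}

// Returns the index of the motor whose tone is strongest in `samples`,
// or -1 if no motor tone's FFT magnitude exceeds `threshold`.
int decodeMotor(const std::vector<double>& samples, double threshold) {
    std::vector<std::complex<double>> spectrum(samples.begin(), samples.end());
    fft(spectrum);
    const double n = static_cast<double>(spectrum.size());
    int best = -1;
    double bestMag = threshold;
    for (std::size_t m = 0; m < kNumMotors; ++m) {
        // FFT bin nearest to this motor's tone frequency.
        const auto bin = static_cast<std::size_t>(std::lround(kMotorTones[m] * n / kSampleRate));
        const double mag = std::abs(spectrum[bin]);
        if (mag > bestMag) {
            bestMag = mag;
            best = static_cast<int>(m);
        }
    }
    return best;
}
```

With these assumptions, 256 samples of a 1500 Hz tone decode to motor 2, and silence decodes to -1. Because only the candidate bins are inspected, a constant DC offset, such as the mid-scale bias an ADC adds to an audio signal, falls entirely into bin 0 and does not affect the decision.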

Some references:

- Affective haptics. Wikipedia. en.wikipedia.org/wiki/Affective_haptics#cite_note-1

- Dzmitry Tsetserukou, Alena Neviarouskaya. iFeel_IM!: Augmenting Emotions during Online Communication. IEEE Computer Graphics and Applications, Vol. 30, No. 5, September/October 2010, pp. 72-80.

- Chris Harrison, Haakon Faste. Implications of Location and Touch for On-Body Projected Interfaces. Proc. Designing Interactive Systems, 2014, pp. 543-552.